1. What is mOS?

Stateful middleboxes such as intrusion detection systems and stateful firewalls rely on TCP flow management to keep track of ongoing network connections. Implementing complex TCP state management modules for network appliances in high-speed networks is difficult. It is even harder because there is no reusable networking stack that offers a development interface for monitoring fine-grained flow states in stateful middleboxes. As a result, developers often end up implementing customized flow management submodules for their systems from scratch.

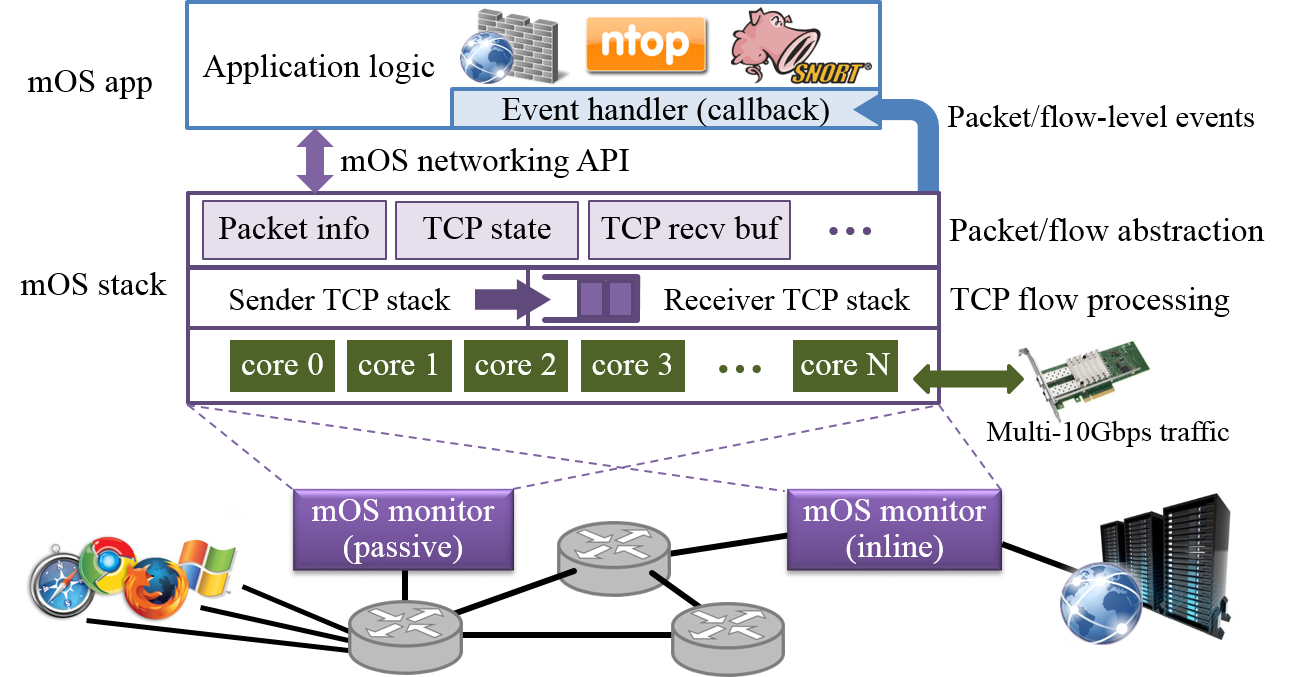

Our long-term goal is to build a middlebox development system named mOS (middlebox Operating System). Our first release is its networking stack, a high-performance user-space programming library specifically targeted at building software-based stateful monitoring solutions (Figure 1). The library interface provides event-based abstractions (see Section 3) that can simplify the codebase of middleboxes developed on the mOS networking stack. We find that applications ported to mOS are more modular than their original counterparts because the stack handles intricate TCP state management internally, leaving the applications to focus solely on custom middlebox logic.

mOS is an extension of our earlier work, mTCP, a high-performance user-level TCP/IPv4 stack. Our evaluation of mOS applications shows that:

- mOS programs are more modular and are smaller in terms of source lines of code when compared to their existing counterparts.

- Applications built on mOS deliver highly scalable performance.

2. Benefits of mOS Networking Stack

Our API can help middlebox developers build a wide range of next-generation L4~L7 applications such as IDSes/IPSes, NATs, and L7 protocol analyzers. Users can also build L2~L3 network tools that do not require stateful processing, such as bridges, routers, and packet-level redundancy elimination systems. mOS offers three main benefits:

- The framework can simulate the TCP states of both endpoints while allowing the programmer to dynamically fine-tune the level of management for individual connections. For example, some applications require full TCP processing, including bidirectional bytestream reassembly (e.g. signature-based IDSes such as Snort). Other network monitors, such as TCP/UDP port blockers, only need to inspect the client side and do not require bytestream analysis at all. mOS lets users adapt dynamically to such application demands.

- Its modular design provides an intuitive abstraction interface that a programmer can use to build custom middlebox applications. Moreover, our library can be easily extended to support higher-layer (L6~L7) protocols.

- mOS is an extension of the mTCP stack, which performs well in high-speed networks. It works on any commodity multi-core machine that hosts one or more multi-queue NICs. Please refer to our requirements page for more details.
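The idea of per-connection monitoring levels in the first benefit can be pictured with a small model. This is only a sketch under our own assumptions: the flag names, `struct flow`, and the helper functions are hypothetical and are not part of the mOS API.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical per-flow monitoring flags (not mOS identifiers). */
enum monitor_flags {
    MON_CLIENT_STATE  = 1u << 0,  /* track client-side TCP state    */
    MON_SERVER_STATE  = 1u << 1,  /* track server-side TCP state    */
    MON_CLIENT_STREAM = 1u << 2,  /* reassemble client bytestream   */
    MON_SERVER_STREAM = 1u << 3   /* reassemble server bytestream   */
};

/* Toy flow record: destination port plus its monitoring level. */
struct flow {
    uint16_t dport;
    uint32_t flags;
};

/* Full processing, as a signature-based IDS would want. */
static uint32_t monitor_full(void)
{
    return MON_CLIENT_STATE | MON_SERVER_STATE |
           MON_CLIENT_STREAM | MON_SERVER_STREAM;
}

/* A port blocker only looks at the client side, with no reassembly. */
static uint32_t monitor_port_blocker(void)
{
    return MON_CLIENT_STATE;
}

/* Turn individual features off for one flow at run time. */
static void flow_disable(struct flow *f, uint32_t flags)
{
    assert(f != NULL);
    f->flags &= ~flags;
}

/* True if either direction still needs bytestream reassembly. */
static bool flow_needs_reassembly(const struct flow *f)
{
    assert(f != NULL);
    return (f->flags & (MON_CLIENT_STREAM | MON_SERVER_STREAM)) != 0;
}
```

In this model, an application could start every flow with `monitor_full()` and call `flow_disable()` once it decides a given connection no longer needs payload inspection, so the cost of reassembly is paid only where it is useful.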

3. mOS Application Programming Interface

Existing open-source stateful middleboxes often have flow management modules tightly integrated with their core logic, which can lead to maintenance issues in the long term because of the complexity of the code. Our interface cleanly separates the middlebox logic from the networking core, based on a key observation: most middlebox operations can be defined as a set of flow-related events, and their respective callback handlers can be used to construct customized logic.

The mOS networking API provides programmers with an elaborate event interface for building middlebox applications. We have identified a list of key flow-related network conditions, known as built-in events, that are relevant for middleboxes. An application registers for events, and mOS then triggers the corresponding callback handlers every time the stack detects these events. The maintenance of flow states and the generation of registered events are managed internally by the networking core. We realize that mOS built-in events may not capture all possible flow conditions that can take place in a network. To address this, we also provide an API that lets developers create their own custom events, called user-defined events.
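The relationship between built-in and user-defined events can be modeled in a few lines of plain C. This is a simplified sketch, not the mOS implementation: `struct event_table`, `define_builtin()`, `define_user_event()`, `register_handler()`, `raise_event()`, and the toy `struct pkt` are all hypothetical names chosen for illustration. The assumption is that a user-defined event is a base event plus a filter function, and that it fires only when the base event occurs and the filter accepts the packet.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define MAX_EVENTS 8

typedef int event_id;

/* Toy packet used by the model (hypothetical). */
struct pkt { int payload_len; };

typedef bool (*filter_fn)(const struct pkt *p);
typedef void (*handler_fn)(event_id ev, const struct pkt *p, void *arg);

struct event_slot {
    event_id   base;     /* -1 for built-in events              */
    filter_fn  filter;   /* NULL for built-in events            */
    handler_fn handler;  /* NULL until a handler is registered  */
    void      *arg;
};

struct event_table {
    struct event_slot slots[MAX_EVENTS];
    int count;
};

static void table_init(struct event_table *t) { t->count = 0; }

static event_id add_slot(struct event_table *t, event_id base, filter_fn filter)
{
    if (t->count >= MAX_EVENTS)
        return -1;
    struct event_slot *s = &t->slots[t->count];
    s->base = base;
    s->filter = filter;
    s->handler = NULL;
    s->arg = NULL;
    return t->count++;
}

static event_id define_builtin(struct event_table *t)
{
    return add_slot(t, -1, NULL);
}

/* A user-defined event derives from an existing event plus a filter. */
static event_id define_user_event(struct event_table *t, event_id base,
                                  filter_fn filter)
{
    assert(base >= 0 && base < t->count && filter != NULL);
    return add_slot(t, base, filter);
}

static void register_handler(struct event_table *t, event_id ev,
                             handler_fn h, void *arg)
{
    assert(ev >= 0 && ev < t->count);
    t->slots[ev].handler = h;
    t->slots[ev].arg = arg;
}

/* Raise an event: run its handler, then every derived event whose
 * filter accepts the packet. Returns the number of handlers invoked. */
static int raise_event(const struct event_table *t, event_id ev,
                       const struct pkt *p)
{
    int fired = 0;
    assert(ev >= 0 && ev < t->count);
    if (t->slots[ev].handler != NULL) {
        t->slots[ev].handler(ev, p, t->slots[ev].arg);
        fired++;
    }
    for (int i = 0; i < t->count; i++) {
        const struct event_slot *s = &t->slots[i];
        if (s->base == ev && s->filter(p))
            fired += raise_event(t, i, p);
    }
    return fired;
}

/* Example filter and handler for the model. */
static bool has_payload(const struct pkt *p) { return p->payload_len > 0; }

static void count_hit(event_id ev, const struct pkt *p, void *arg)
{
    (void)ev;
    (void)p;
    ++*(int *)arg;
}
```

With one built-in "packet in" event and a derived "packet with payload" event, a pure ACK invokes only the built-in handler, while a data packet invokes both. Because a derived event is always created after its base, the recursion in `raise_event()` terminates.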

Along with the event interface, we also extend the mTCP socket interface to introduce a new type (MOS_SOCK_MONITOR_STREAM) that can be used to monitor individual flows inside the registered event callback handlers.

The following code snippet shows how the event and socket abstractions can be used in a typical mOS application:

    /* User-defined event filter: true for an initial SYN (SYN set, ACK clear) */
    static bool
    IsSYN(mctx_t m, int sock, int side, event_t event)
    {
        struct pkt_info p;

        // Retrieve flow's last packet
        mtcp_getlastpkt(m, sock, side, &p);
        return (p.tcph->syn && !p.tcph->ack);
    }

    static void
    OnFlowStart(mctx_t m, int sock, int side, event_t event)
    {
        struct pkt_info p;

        // Retrieve flow's last packet
        mtcp_getlastpkt(m, sock, side, &p);

        // initialize per-flow user context (zero-filled)
        struct HTTPContext *http_c = calloc(1, sizeof(struct HTTPContext));
        if (http_c == NULL)
            return;
        http_c->sport = p.tcph->source;
        ...
        mtcp_set_uctx(m, sock, http_c);
    }

    static void
    OnFlowEnd(mctx_t m, int sock, int side, event_t event)
    {
        // free up per-flow user context
        free(mtcp_get_uctx(m, sock));
    }

    static void
    mOSAppInit(mctx_t m)
    {
        monitor_filter_t ft = {0};
        int s;

        // create a passive monitoring socket & set up its traffic scope
        s = mtcp_socket(m, AF_INET, MOS_SOCK_MONITOR_STREAM, 0);
        ft.stream_syn_filter = "dst net 216.58 and dst port 80";
        mtcp_bind_monitor_filter(m, s, &ft);

        // set up user-defined event for OnFlowStart()
        event_t udeSYN = mtcp_define_event(MOS_ON_PKT_IN, IsSYN, NULL);

        // register event handlers (processHTTP is not shown)
        mtcp_register_callback(m, s, udeSYN, MOS_HK_SND, OnFlowStart);
        mtcp_register_callback(m, s, MOS_ON_CONN_END, MOS_HK_SND, OnFlowEnd);
        mtcp_register_callback(m, s, MOS_ON_PKT_IN, MOS_HK_SND, processHTTP);
    }

In the code above, mOSAppInit() first creates a passive monitoring socket of the new type MOS_SOCK_MONITOR_STREAM. The mtcp_bind_monitor_filter() function restricts the traffic that the monitor scans. We then register three events: one at the start of the connection (on a SYN request), one for packet arrival (its callback handler is not shown), and one for connection termination. The example uses two built-in mOS events and one user-defined event for this purpose. A developer can register for these events on either the sender or the receiver side of the stack (see the next section for details). The registered events trigger OnFlowStart() and OnFlowEnd() on connection initiation and termination, respectively. After the application is initialized, mOS calls the callback handlers for flows that trigger the registered events. For the example OnFlowStart() function, the mOS core passes the active monitoring socket, which is unique to each connection, a side variable (indicating client or server), and the registered event ID.
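For readers less familiar with the TCP header, the SYN test used to detect the start of a connection can also be written against raw header bytes. The sketch below is independent of mOS and its function names are our own. It relies only on the standard TCP header layout: the source port occupies the first two bytes in network byte order, and the flags byte sits at offset 13, with SYN = 0x02 and ACK = 0x10.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define TCP_MIN_HDR_LEN  20    /* header length without options */
#define TCP_FLAGS_OFFSET 13    /* byte holding the control bits */
#define TCP_FLAG_SYN     0x02
#define TCP_FLAG_ACK     0x10

/* True for the first packet of a handshake: SYN set, ACK clear.
 * A SYN/ACK (the server's reply) is deliberately rejected. */
static bool tcp_is_initial_syn(const uint8_t *tcp, size_t len)
{
    assert(tcp != NULL);
    if (len < TCP_MIN_HDR_LEN)
        return false;
    uint8_t flags = tcp[TCP_FLAGS_OFFSET];
    return (flags & TCP_FLAG_SYN) != 0 && (flags & TCP_FLAG_ACK) == 0;
}

/* Source port converted from network to host byte order.
 * The caller must supply at least two header bytes. */
static uint16_t tcp_source_port(const uint8_t *tcp)
{
    assert(tcp != NULL);
    return (uint16_t)((tcp[0] << 8) | tcp[1]);
}
```

Note that a port read directly from the header is in network byte order, so an application that prints or compares port numbers would normally convert the value first, as `tcp_source_port()` does here.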

4. mOS Networking Model

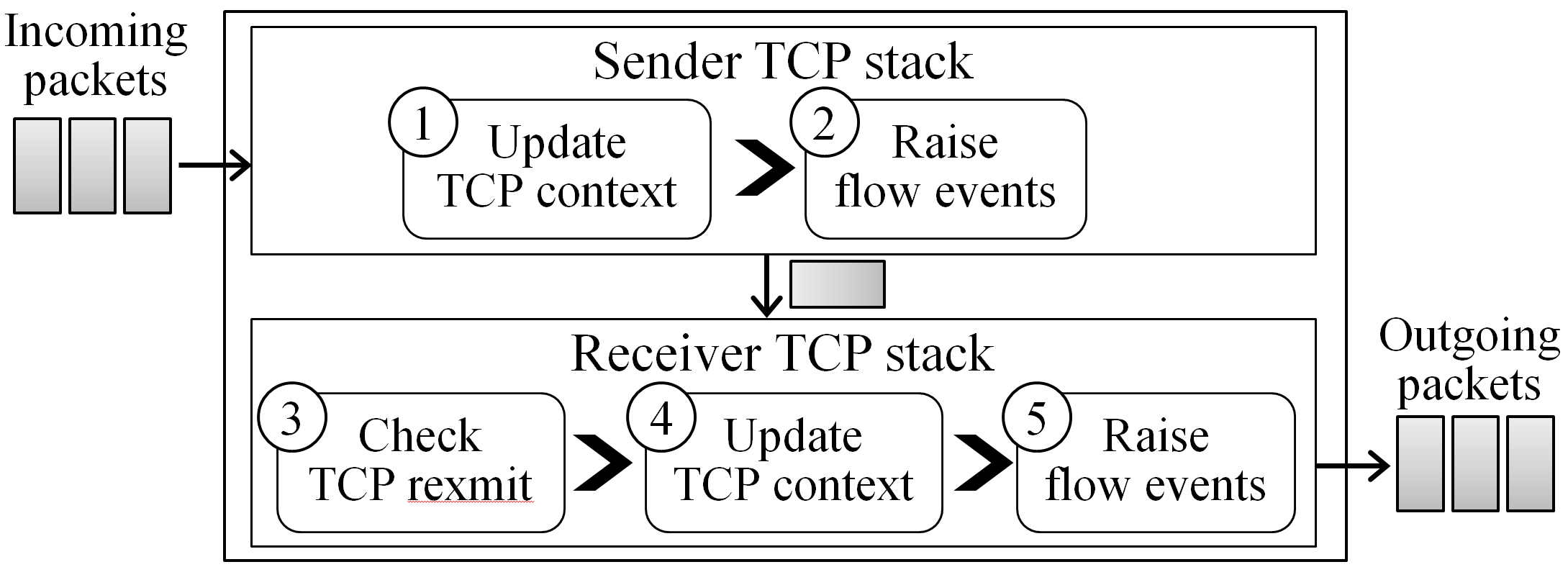

A typical mOS (per-core) runtime instance is illustrated in Figure 3. The mOS core can be seen as a coupling of two mTCP stacks that simulate both TCP endpoints. An ingress packet first runs through the sender-side stack. The packet triggers an update to its TCP state and records all relevant events.

Next, the mOS core triggers callback handlers (MOS_HK_SND hook) if an mOS application has already registered for a subset of these events. It then checks whether the packet is, in fact, retransmitted. Finally, this process is repeated with the receiver-side stack (MOS_HK_RCV hook).
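The order of operations described above can be summarized with a toy model. This is a sketch under stated assumptions rather than the mOS core: `struct flow_model`, `process_packet()`, and the `HK_SND`/`HK_RCV` constants (stand-ins for MOS_HK_SND and MOS_HK_RCV) are hypothetical. The text does not say how retransmissions are detected, so the sequence-number comparison used here is our own assumption.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Stand-ins for the MOS_HK_SND / MOS_HK_RCV hook points. */
enum hook { HK_SND, HK_RCV };

/* Simplified per-side state: highest sequence number seen so far. */
struct side_state {
    uint32_t next_seq;
    unsigned pkts;
};

struct flow_model {
    struct side_state snd;   /* sender-side stack   */
    struct side_state rcv;   /* receiver-side stack */
};

/* Records which hooks fired, in order, for one packet. */
struct trace {
    enum hook order[2];
    int       n;
    bool      retransmitted;
};

typedef void (*hook_cb)(enum hook h, struct trace *tr);

static void record_hook(enum hook h, struct trace *tr)
{
    assert(tr != NULL && tr->n < 2);
    tr->order[tr->n++] = h;
}

static void process_packet(struct flow_model *f, uint32_t seq, uint32_t len,
                           hook_cb cb, struct trace *tr)
{
    assert(f != NULL && tr != NULL);

    /* 1. The sender-side stack updates its view of the flow. */
    f->snd.pkts++;

    /* 2. Sender-side callbacks fire. */
    if (cb != NULL)
        cb(HK_SND, tr);

    /* 3. Retransmission check (assumed rule: the segment ends at or
     *    before data already seen; signed difference handles wraparound). */
    uint32_t end = seq + len;
    tr->retransmitted = (int32_t)(end - f->snd.next_seq) <= 0;
    if (!tr->retransmitted)
        f->snd.next_seq = end;

    /* 4. The same steps repeat on the receiver-side stack. */
    f->rcv.pkts++;
    if (!tr->retransmitted)
        f->rcv.next_seq = end;
    if (cb != NULL)
        cb(HK_RCV, tr);
}
```

In this model, a callback registered on the sender-side hook sees a packet before the receiver-side state has been updated, which is one reason an application might choose one hook over the other.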

5. Evaluation

Our experience porting middlebox applications to the mOS networking stack has been positive.

- Code reduction: We ported Abacus, a cellular data accounting flow monitoring system that detects "free-riding" attacks. The original Abacus version relies on a custom flow management module that spans 4,639 lines of code. When we port Abacus to the mOS API, the size of the program is reduced to only 561 lines of code (88% code reduction).

- Performance: We also ported Snort3, a multi-threaded signature-based software NIDS. Using the mOS event-based API, we can replace Snort's stream5 flow management and http-inspect (HTTP preprocessor) modules. Our results show analysis throughput similar to that of the Snort3-DPDK setup.

6. Publications

- "A Case for a Stateful Middlebox Networking Stack". Muhammad Jamshed, Donghwi Kim, YoungGyoun Moon, Dongsu Han, and KyoungSoo Park. In the SIGCOMM Computer Communication Review, Volume 45, Issue 4, pp. 355-356, October 2015.

- A full paper version is in submission.

7. Source code

The code will soon be released on GitHub.

Our release contains the source code of the mOS library, sample applications, and a detailed tutorial on how to program stateful middleboxes using the mOS API. You can refer to our detailed documentation on how to build and install new mOS applications.

8. People

Students: Muhammad Asim Jamshed, Donghwi Kim, and YoungGyoun Moon

Faculty: Dongsu Han and KyoungSoo Park

You can reach all of us through our mailing list: mtcp at list.ndsl.kaist.edu.